[cover image by Nicolas Bouvier]

Theranos has fallen apart, and with it, a lofty dream of “never having to say goodbye too soon.” Its founder is now looking at prison. Remote schooling is now seen as largely a bust. Instagram and Facebook are causing depression in teens. Purveyors of junk “science” and misinformation have let us down during a global pandemic, and tech has in some ways amplified the ability to misinform. Social media and cybercrime risk undermining the very foundations of government. Questions linger about the wisdom and safety of leading-edge gain-of-function research, as well as its potential, accidental role in the greatest world health crisis in our lifetime.

Is the tech news starting to get you down? Here are four reasons for hope.

1. Renewables have made staggering gains over the past decade, and usage is accelerating

Amidst all the climate doom, we need to recognize that we are, in fact, dramatically changing our ways. According to the New York Times, renewable energy sources now account for nearly 21 percent of the electricity the United States uses, up from about 10 percent in 2010. Notably, this trend has held through both Democratic and Republican administrations. That’s astonishing progress in just a decade.

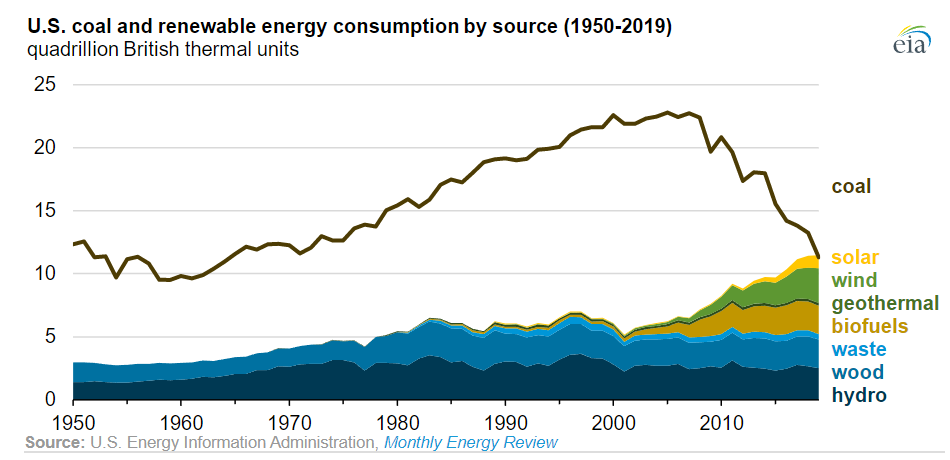

The US Energy Information Administration (EIA) tracks the energy consumption by source. As you can see, the areas of greatest growth are all renewables, and America’s reliance upon coal has plummeted over the past decade:

Solar power in particular is poised for near-exponential growth. Even though manufacturing and supply-chain disruptions have held growth back, the world still added nearly 290 gigawatts of new renewable capacity in 2021. A single gigawatt is enough to power about 700,000 homes, or about ten million 100-watt light bulbs. The EIA expects solar to account for nearly half of all new US electricity-generating capacity in 2022, and the International Energy Agency (IEA) reports that renewable growth is running almost 60% faster than its average rate over the past five years. That’s great news.

2. Up in the Sky! The James Webb Telescope Is Set to Reveal the Heavens

On Christmas Day 2021, NASA blasted $10 billion worth of the largest and most complex observatory ever built into space.

The James Webb Space Telescope is a large infrared observatory, more than 100 times as powerful as the Hubble Space Telescope. It will allow scientists to peer deeper into the history of our universe than ever before. Scientists hope it will answer many open questions about the early universe, in particular, what happened in the first 400 million years after the Big Bang. There’s also the tantalizing possibility that it might help identify distant worlds on which alien life is feasible.

What’s more, it’s a much-needed sign of multinational cooperation. We should applaud the international partnership that made it possible; it’s a collaboration between NASA, the European Space Agency, and the Canadian Space Agency.

Webb has unfolded and will now travel 1 million miles, then calibrate its instruments. At this writing, all systems look nominal. By late March, researchers hope it will be capable of sending back its first images.

Webb’s largest feature is a tennis-court-sized, ultralight sunshield, which reduces heat from the Sun more than a million-fold while also blocking enough light for Webb’s instruments to peer deep into dark space. But many other innovations make the mission possible, too. Watch this video and be awestruck by the ingenuity of humankind.

3. SpaceX’s Starlink Has Gone Live, a Dramatic Leap for Rural Connectivity

Speaking of space, but sticking much closer to terrestrial needs, there is now a network of low-orbit satellites that’s capable of delivering broadband Internet connectivity to pretty much anyone, anywhere on the planet.

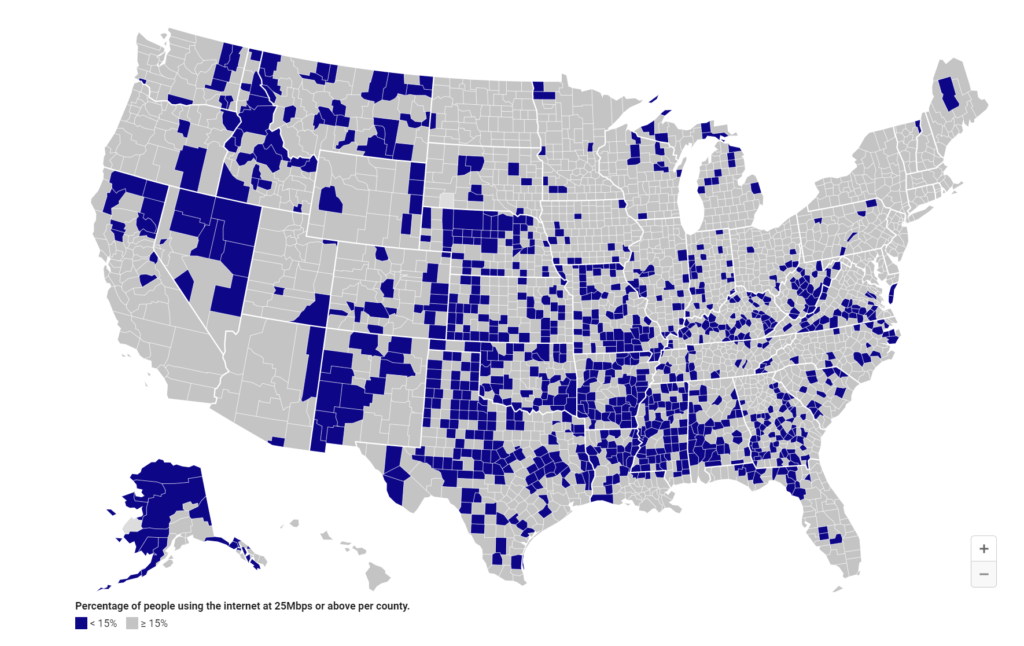

“So what?” you say. After all, there have been satellite internet vendors before. And if you live in a city or suburb, you’ve probably had cable or fiber-optic access for quite some time. But try to remember the days of dialup: huge swaths of the country, and of course the world, still don’t have broadband.

Yes, DISH Network and others exist. The difference is that Starlink’s satellites orbit roughly sixty times closer to Earth than traditional geostationary satellites, so the network delivers a fast, low-latency connection, good enough for real-time audio and video. You can make phone calls, join Zoom meetings and host live video in places where that was never possible before. For millions of people, and across more of the planet than today’s broadband networks reach, it’ll be like upgrading from dialup speeds to broadband.
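The “sixty times closer” point is what matters for latency. Here is a rough back-of-the-envelope sketch in Python, assuming a best case where the satellite sits directly overhead, a simple relay path (your dish up to the satellite, down to a ground station, and back the same way), and no processing or routing delays. The 550 km Starlink altitude is an assumed typical value; 35,786 km is the altitude of geostationary orbit.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458

def min_round_trip_ms(altitude_km: float) -> float:
    """Best-case round trip for a simple relay link: user -> satellite -> ground
    station, then back again. That is four legs of `altitude_km`, assuming the
    satellite is straight overhead and ignoring all processing delays."""
    path_km = 4 * altitude_km
    return path_km / SPEED_OF_LIGHT_KM_S * 1000

GEO_ALTITUDE_KM = 35_786      # geostationary orbit
STARLINK_ALTITUDE_KM = 550    # assumed typical Starlink orbital altitude

geo = min_round_trip_ms(GEO_ALTITUDE_KM)
leo = min_round_trip_ms(STARLINK_ALTITUDE_KM)
ratio = GEO_ALTITUDE_KM / STARLINK_ALTITUDE_KM
print(f"GEO: {geo:.0f} ms, Starlink: {leo:.1f} ms, altitude ratio: {ratio:.0f}x")
```

Even in this idealized case, a geostationary round trip takes close to half a second, which is why conversations over older satellite links feel laggy, while the low-orbit path adds only a few milliseconds.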

The opportunities for rural connectivity, for research and data relay in forests, deserts and tundra, and even for live oceanographic data feeds have multiplied. Soon there will be no place on the planet where broadband connectivity is out of reach, and that’s critical for researchers, first responders, farmers, healthcare facilities and remote communities.

Starlink’s goal is to sell high-speed internet connections to anyone on the planet. After years of development, and with $885 million in rural broadband subsidies awarded by the FCC in 2020, Starlink now has a fleet of nearly 2,000 satellites overhead.

There is a downside, however. Because there are so many of them, Starlink’s low-Earth-orbit satellites can be quite visible from the ground, and Starlink has earned justified criticism and concern from environmentalists and astronomers.

SpaceX is aware of this and has piloted several mitigation steps to make the satellites near-invisible, such as sunshades that fold down to block reflected sunlight, and orienting the satellite’s main “fin” directly toward the Sun. The company claims these pilot tests have been quite successful and that its satellites are near-invisible to the naked eye. I file this under “wait and see,” but I think broadband-possible-everywhere is a major achievement with tremendous leverage for good.

4. Artificial Intelligence Cracks A Nagging, Vital Molecular Question: AlphaFold

It has been hailed as “the most important achievement in Artificial Intelligence ever.” No, they’re not talking about the terrific Netflix recommendations you’re getting during this pandemic. They’re giving this lofty praise to AlphaFold 2, a highly accurate model for predicting how proteins fold. It’s not only the most important achievement in AI ever, it’s also the most important computational biology achievement ever.

Proteins are essential to just about all the important functions in our body: they help us digest our food, build our muscle, carry signals between cells, regulate our genes and more. Viruses are a small collection of genetic code (either DNA or RNA) surrounded by a protein coat.

In short, proteins are at the core of our biology, and we can influence them through nutrition, exercise, pharmaceuticals, enzymes and more. But a key question remains: how do proteins physically take shape at the molecular level, and how are they likely to interact with other molecules, such as enzymes, pharmaceuticals or other proteins? A computer model that could predict how proteins take shape, or fold, in such interactions could revolutionize how we develop new therapeutics and diagnose disease.

The number of protein types is much larger than you may think. Depending upon cell type, there are between 20,000 and 100,000 different protein types in each human cell.

For nearly 50 years, advances in medicine and pharmaceutical research have been hampered by a key question: “How do proteins fold up?” In 2007, one scientist described this question as “one of the most important yet unsolved issues of modern science.”

Sure, we have computers. And from about 1970 through 2010, researchers tried a “bottom-up,” brute-force modeling approach. But predicting how proteins fold and unfold is fantastically difficult, because there are so many possibilities. Researchers have estimated that many proteins could in principle adopt on the order of 10^300 possible configurations. “To frame that figure more vividly, it would take longer than the age of the universe for a protein to fold into every configuration available to it, even if it attempted millions of configurations per second,” writes Rob Toews in Forbes.
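To see why brute force is hopeless, here is the arithmetic behind the Forbes quote as a small Python sketch. It assumes exactly 10^300 configurations, a rate of one million (10^6) configurations per second, and a universe age of 13.8 billion years; all three are round, order-of-magnitude assumptions.

```python
import math

CONFIGURATIONS = 10**300          # order-of-magnitude figure quoted above
RATE_PER_SECOND = 10**6           # assumed: "millions of configurations per second"
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_S = 13.8e9 * SECONDS_PER_YEAR   # roughly 4.4e17 seconds

# Python integers are exact, so this is precisely 10**294 seconds
seconds_needed = CONFIGURATIONS // RATE_PER_SECOND

# Compare in log10 space, because 10**294 is far too large for a float
log_ratio = math.log10(seconds_needed) - math.log10(AGE_OF_UNIVERSE_S)
print(f"An exhaustive search takes about 10^{log_ratio:.0f} times the age of the universe")
```

Under these assumptions, trying every shape would take on the order of 10^276 times the age of the universe, so any practical method has to exploit the fact that real proteins do not search at random.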

Scientists have long observed that proteins do not fold randomly, but they couldn’t decipher a sensible pattern or model it accurately. For decades, researchers armed with computers tried to model the underlying physics of proteins and amino acids to build some kind of predictive model. But even after decades of such work, those models fell short on reliability.

And then along came machine learning, and in particular, deep learning. Machine learning is a computational technique where, rather than hand-writing procedural rules in an “if this, then that,” bottom-up way, you take clean, well-labeled datasets of inputs and their correct outputs, and use mathematical techniques to “train” a computer to decipher (or learn) the underlying patterns. It’s rather like teaching a dog new tricks: repeatedly give the command (“sit”), reward the right response with a cookie, and over many repetitions the dog learns to associate the input with the output.
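As a toy illustration of “learning from labeled examples,” here is a minimal Python sketch that fits a straight line by gradient descent. The data and the hidden rule (y = 2x + 1) are made up for illustration. The point is that the code never contains that rule, only examples, and it recovers the pattern by repeatedly nudging two numbers to reduce its error.

```python
# Labeled training data: each input comes with the "right answer".
# The hidden rule (y = 2x + 1) is never written into the learner below.
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
labels = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = 0.0, 0.0          # model parameters: the model starts knowing nothing
learning_rate = 0.02

for epoch in range(2000):
    # Gradient of the mean squared error with respect to w and b
    grad_w = grad_b = 0.0
    for x, y in zip(inputs, labels):
        error = (w * x + b) - y
        grad_w += 2 * error * x / len(inputs)
        grad_b += 2 * error / len(inputs)
    # Nudge each parameter "downhill" to shrink the error a little
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"learned rule: y = {w:.2f}x + {b:.2f}")
```

After training, it prints a rule very close to y = 2.00x + 1.00. AlphaFold’s models are vastly larger and more sophisticated, but the basic loop of predict, measure the error, and adjust is the same idea.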

Deep learning takes this training process one step further, breaking the simple input-to-output mapping into many layers. This is loosely akin to the layers of neurons in the brain: some neurons in the ear respond to raw changes in air pressure, other groups combine those signals into phonetic chunks, and still others associate the result with getting a cookie or not, which together add up to “learning.”
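Here is a minimal Python sketch of why layers help. It wires up two layers of simple threshold “neurons” to compute exclusive-or (XOR), something a single layer of such neurons famously cannot do. The weights are hand-picked for illustration. In a real deep network they would be learned from data, and there would be far more neurons and layers.

```python
def step(z: float) -> int:
    """Threshold activation: the 'neuron' fires (1) if its input is positive."""
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs, then thresholds it."""
    return [step(sum(w * x for w, x in zip(ws, inputs)) + b)
            for ws, b in zip(weights, biases)]

def tiny_network(x1: int, x2: int) -> int:
    # Layer 1 detects two simple features:
    # "at least one input is on" (OR) and "both inputs are on" (AND).
    hidden = layer([x1, x2], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Layer 2 combines those features: "OR but not AND", i.e. exclusive-or.
    (out,) = layer(hidden, weights=[[1, -1]], biases=[-0.5])
    return out

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```

The first layer only recognizes simple patterns; the second builds a more complex concept out of them. Stacking many such layers is what lets deep networks capture patterns as intricate as protein structure.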

The training data for AlphaFold and AlphaFold 2 came mainly from the Worldwide Protein Data Bank, a massive public archive of known protein structures. The work comes from DeepMind, the Google-owned research lab that also built AlphaGo.

Prediction methods are benchmarked in a competition, the Critical Assessment of Protein Structure Prediction (CASP), held every other year. Here’s Forbes’ Toews:

AlphaFold’s performance at last year’s CASP was historic, far eclipsing any other method to solve the protein folding problem that humans have ever attempted. On average, DeepMind’s AI system successfully predicted proteins’ three-dimensional shapes to within the width of about one atom. The CASP organizers themselves declared that the protein folding problem had been solved.

Having a computational model for how proteins fold should greatly speed up our understanding of how our biology works. Perhaps fewer animal tests will be necessary, our understanding of viruses will become more accurate, and effective therapeutics will be discovered more rapidly. It’s an incredibly leveraged discovery for humankind.

Take some time out of your day to watch this terrific overview video about AlphaFold 1.0. Then note that the advances since then have been significant, so much so that the competition hosts now call the problem “solved.”

Read more: https://www.nytimes.com/2021/12/27/technology/the-2021-good-tech-awards.html