I’m on a parent advisory committee at my daughter’s school. The committee is taking a look at the school’s existing Computational Thinking curriculum and where it might want to head in the future.

Luckily for us, the faculty is already doing a very good job with the curriculum. So our role as advisers is to provide a sounding board and perhaps additional guidance regarding ways they might want to augment the program. Key topics not yet addressed much in the existing computing curriculum are Machine Learning, Deep Learning and Artificial Intelligence. These are pretty advanced fields, but they are becoming essential to both the world we live in today and the one we will experience in the future. So what kinds of things might be useful to introduce and explore at the middle school (grades 6-8) and high school (grades 9-12) level?

What’s There, What Might Be Missing

At the school, they’re already introducing many central concepts of computing, like breaking down problems into smaller problems, basic algorithms, data modeling, abstraction, and testing. They’re teaching basic circuits, robotics, website creation, JavaScript, HTML, Python basics and more.

In the current era, understanding data is absolutely essential. That covers topics like what makes a good dataset, how to gather data, the ethics involved in data gathering, basic statistics, the difference between correlation and causation, how to “clean” a dataset, how to separate out a “training” and a “validation” dataset, what signals we use to make an educated guess as to whether we should trust a dataset, and more.

Next, a basic understanding of how machine learning works is useful, because it can build a better picture both of what’s possible and what the limitations might be. So understanding at a very basic level what we mean by terms like “deep learning”, “machine learning” and “artificial intelligence” can be helpful, because these terms come up in the news a great deal, and they might also make great career choices for many students (and also hint at fields ripe for disruption and potential decline).

One participant also pointed out that an understanding of current agile development practices is helpful. Agile is a development process and philosophy that emphasizes flexibility, all-team focus, constant feedback and continuous updates. It typically includes components like source control, short work bursts called “sprints” where everyone works toward some specific set of short/medium-term achievements, regular reviews and adaptability. Some of these tools and techniques (e.g., version control, “stand-up” reviews, “minimum viable product”, iteration and measurement such as A/B testing) can actually be quite useful in group projects outside of the computing world.

And simply browsing through GitHub and seeing what people are working on can open up a world of possibilities. So it’s good to know how to explore it, and that it’s right there and available to anyone with access to a computer.

Another basic piece of the conceptual puzzle: Application Programming Interfaces (APIs). APIs are how various computer services and devices talk to one another “across the wire,” and because they often have tiny services behind them (“microservices”), they can best be thought of as the LEGO building blocks of today’s applications and the “Internet of Things”. My hunch is that once students fully understand that pretty much any of the APIs they run across can be composed together to form one big solution, that conceptual understanding unlocks a gigantic world of possibility. (Just a few of the many APIs in the machine learning field are listed below.)

Machine Learning Resources

As for actually getting your hands dirty and building out an intelligent algorithm or two inside or outside of class, the likelihood of success certainly depends upon the students and their interest. Are there canonical, interesting and accessible examples to introduce these topics? We in the committee (including the key faculty members) certainly think so. With that in mind, here’s a short running list of projects and videos in the computing world that might be interesting to educators and kids alike.

Introductory Resources

Google Quick Draw

This is a fun Pictionary-style game where it’s the computer that does the guessing. It introduces how repeated input data is used to train a machine to “predict” (classify) output based on input. It might be a fun way to raise the question of how it’s done, since it seems magical.

Questions for class:

- How does it work?

- How do you think they built this? What data and tools might you need if you wanted to make your own?

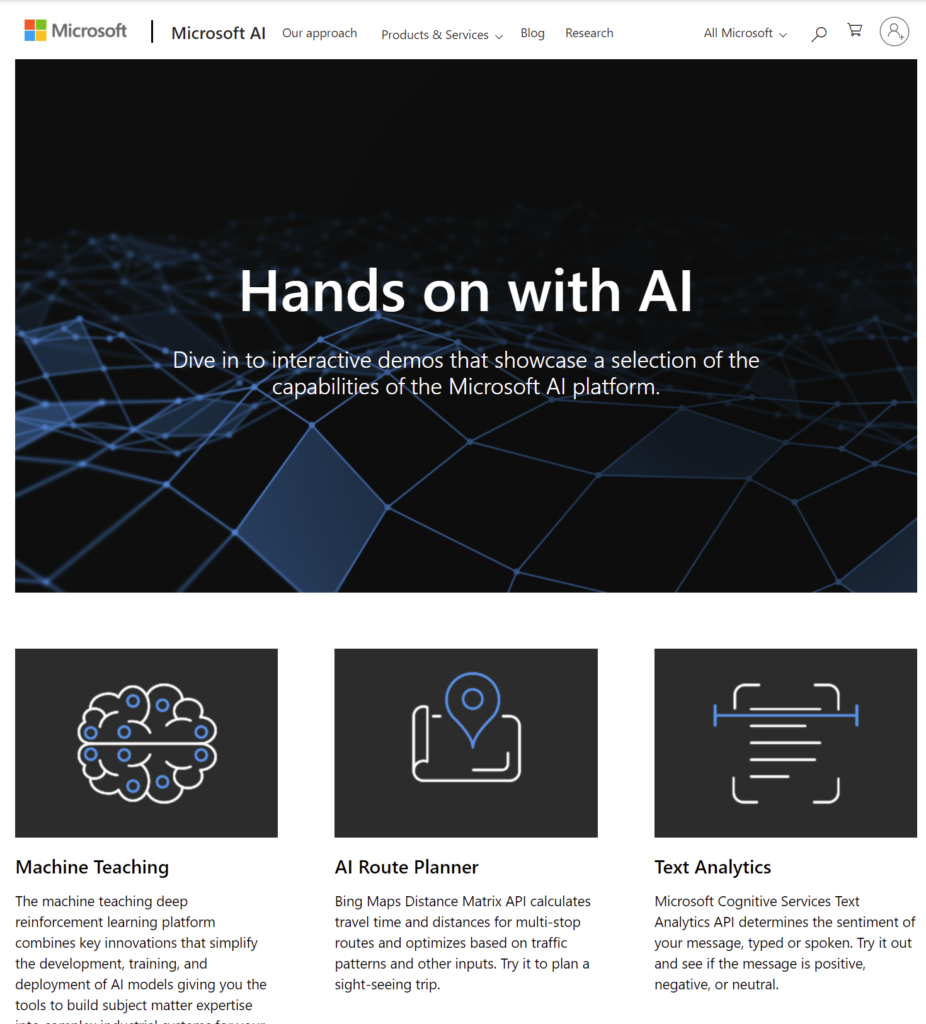

Microsoft AI Demos Area

Microsoft Corporation has several great interactive playgrounds. You can experiment with text analytics (including sentiment analysis), speech authentication, face and emotion recognition, route planning, language understanding and more.

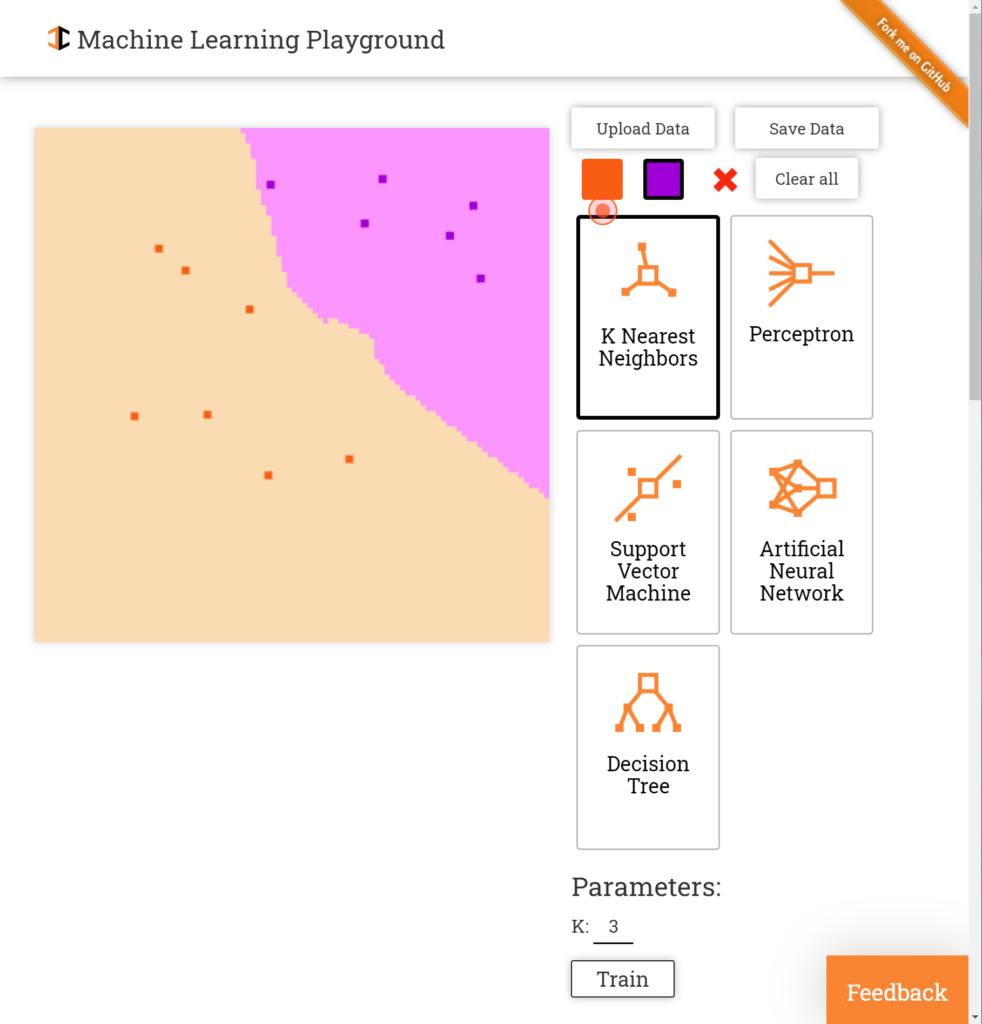

ML-Playground

A terrific playground to experiment with classification algorithms (k-nearest neighbors, support vector machines and more) is at http://ml-playground.com/. You can plot two colors of points on a 2D (x, y) graph, and then apply a few algorithms to visually see how well they recognize “clusters” of like points. Excellent and free.
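One classifier that is easy to picture on a two-color plot like this is k-nearest neighbors: to label a new point, look at the few labeled points closest to it and let them vote. Here is a minimal sketch of that idea in plain Python. The point coordinates and the color names are made up for illustration, and this is not a claim about how the playground itself is implemented.

```python
from collections import Counter
from math import dist

def knn_predict(points, labels, query, k=3):
    """Label `query` by majority vote among its k nearest labeled points."""
    # Rank every labeled point by its straight-line distance to the query.
    order = sorted(range(len(points)), key=lambda i: dist(points[i], query))
    # Let the k closest points vote on the label.
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]

# Made-up points: one group near the bottom-left, one near the top-right.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
labels = ["orange", "orange", "orange", "purple", "purple", "purple"]

print(knn_predict(points, labels, (2, 2)))  # lands among the orange points
print(knn_predict(points, labels, (7, 9)))  # lands among the purple points
```

A nice classroom question: what happens to a point placed exactly halfway between the two groups, and how does changing `k` affect the answer?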

ML Showcase

A fun meta-site that rolls up a list of machine learning resources is the ML Showcase. Check it out.

Amazon Machine Learning APIs

Amazon also has a very large set of useful machine learning APIs, but from my cursory look, few of them offer “playground” demo areas outside of a paywall, so they might not be the best fit for a classroom at the time of writing.

Create Music with Machine Learning

Fun app: For those musically inclined, check out Humtap on iOS. Hum into your phone and tap the phone, and the AI will create a song based on your input.

Programming Tools

Machine Learning for Kids (Scratch + IBM Watson Free Level)

Scratch is a great, free programming environment for kids which grew out of the Media Lab at MIT. This Machine Learning for Kids project is a very clever and surprisingly powerful extension to the Scratch Programming Language written by Dale Lane, an interested parent. It brings the power of the IBM Watson engine to Scratch by presenting Machine Learning Building Blocks such as text classifiers and image recognizers. These visual drag-and-drop blocks can then be connected into a Scratch program. Fun examples include:

- An insult vs. compliments recognizer (video below)

- Rock-scissors-paper guessing game

- A dog vs. cat picture recognizer

I’ve wired up the compliments vs. insult recognizer on my own desktop, and it’s a very good overview of the promise and pitfalls when trying to build out a machine learning (classifier) model. I was impressed with the design and documentation of the free add-on, and it makes playing around with these tools a natural extension to any curriculum that’s already incorporating Scratch. I can imagine that for many middle schoolers and high schoolers, coming up with a list of insults to “train” the model would be quite fun.
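For older students who have moved from Scratch to Python, the same “train it with examples, then test it” loop can be sketched in a few lines. This is a deliberately naive word-counting classifier of my own, not how Watson or the Machine Learning for Kids blocks work internally, and the training phrases are invented (and kept classroom-safe).

```python
from collections import Counter

def train(examples):
    """Count how often each word appears under each label."""
    counts = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(text.lower().split())
    return counts

def classify(counts, text):
    """Pick the label whose training words overlap most with the text."""
    words = text.lower().split()
    scores = {label: sum(seen[w] for w in words) for label, seen in counts.items()}
    return max(scores, key=scores.get)

# Invented training phrases, each paired with its known-good label.
examples = [
    ("you are wonderful", "compliment"),
    ("what a brilliant idea", "compliment"),
    ("you are so kind", "compliment"),
    ("you are a nincompoop", "insult"),
    ("what a silly goose", "insult"),
    ("you smell like old socks", "insult"),
]

model = train(examples)
print(classify(model, "brilliant and kind"))
print(classify(model, "silly old socks"))
```

The pitfalls show up quickly: a sentence made entirely of words the model has never seen still gets a label, and a compliment that happens to reuse words from the insult list can be misjudged. Both make good discussion points about why the quantity and variety of training data matter.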

Real-World Examples

Perhaps you’d like to begin with a list of real-world examples for machine learning? Examples abound:

- Voice devices like Amazon Alexa (Echo), Siri, Cortana and Google Assistant

- Netflix, Spotify and Pandora Recommendations

- Spam/ham email detection (how does your computer know it’s junk email?)

- Automatic colorization of B&W Images

- Amazon product recommendations

- Machine translation — Check out the amazing new Skype Translator

- Synthetic video

- Twitter, Facebook, Snapchat news feeds

- Weather forecasting

- Optical character recognition, and more specifically ZIP code recognition: machines which recognize handwritten digits, one of the canonical examples (MNIST)

- Videogame automated opponents

- Self-driving technology

- Google Search (which results to show you first, text analysis, etc.)

- Antivirus software

What these solutions all have in common is a Machine Learning engine that ingests vast amounts of data and relies on known-good outcomes, a training set of data, a validation set of data, and a set of algorithms that programmatically try to guess the best output given a set of inputs.
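Those ingredients fit together in a simple loop: hold some data back, learn from the rest, then check the guesses against the known-good outcomes you held back. Below is a minimal sketch in plain Python. The data is made up (a count of “suspicious words” per email, with a spam/ham label), and the “algorithm” is just a learned cutoff, so treat it as an illustration of the workflow rather than a realistic spam filter.

```python
import random

def split_rows(rows, validation_fraction=0.25, seed=0):
    """Shuffle a copy of the rows, then cut it into training and validation sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - validation_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_threshold(training):
    """'Train' by finding the midpoint between the average input of each label."""
    spam = [x for x, label in training if label == "spam"]
    ham = [x for x, label in training if label == "ham"]
    return (sum(spam) / len(spam) + sum(ham) / len(ham)) / 2

def accuracy(threshold, rows):
    """Fraction of rows where the threshold guess matches the known-good label."""
    correct = sum(("spam" if x >= threshold else "ham") == label for x, label in rows)
    return correct / len(rows)

# Made-up data: x = number of suspicious words, label = known-good outcome.
rows = [(x, "spam" if x >= 10 else "ham") for x in range(20)]

training, validation = split_rows(rows)
threshold = fit_threshold(training)
print("threshold learned from training data:", threshold)
print("accuracy on unseen validation data:", accuracy(threshold, validation))
```

The key habit is that the validation rows are never used during training, so the accuracy number is an honest estimate of how the model handles data it has not seen.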

Data Science

I’ll likely do a separate set of posts on introducing Data Science to kids, but in the meantime, I wanted to mention one dataset here.

“Hello World” for Data Science: Titanic Survival

Machine learning is about learning from data, so Data Science is a direct cousin to (and overlaps heavily with) both Machine Learning and Deep Learning. A machine learning algorithm is only as good as its training and validation data, and students need to become familiar with how to recognize valid vs. invalid data, what data is the right kind to include vs. exclude, how to clean and augment data and more. Tools of the trade vary, but the Python data analysis stack (libraries such as pandas, numpy and scikit-learn) is becoming a lingua franca of the field.

There are several datasets that offer interesting ways to introduce data analysis, but one of my favorites is the Titanic dataset (High Schoolers: Data Science, Predictions and AI). Few events in history can match the drama, scale and both social and engineering lessons contained in the Titanic disaster. Would you have survived? What would have been your odds? What is the difference between correlation and causation? You can actually make a prediction as to what the survival likelihood of a passenger was, based on their class of service, gender, age, point of embarkation and more. While not strictly “machine learning” per se, this dataset introduces the basic building blocks of machine learning: data, features, and labeled outcomes.
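A first exercise might be as simple as grouping passengers by one feature and comparing survival rates. Here is a minimal sketch in plain Python. The eight rows are invented for illustration and are NOT real passenger records, and the field names (`pclass`, `sex`, `survived`) are my own assumptions, so adjust them to whatever copy of the dataset you use. With pandas, the same idea is usually expressed as a `groupby` followed by a mean.

```python
from collections import defaultdict

# Invented toy rows for illustration only; not real passenger records.
passengers = [
    {"pclass": 1, "sex": "female", "survived": 1},
    {"pclass": 1, "sex": "male", "survived": 1},
    {"pclass": 2, "sex": "female", "survived": 1},
    {"pclass": 2, "sex": "male", "survived": 0},
    {"pclass": 3, "sex": "female", "survived": 1},
    {"pclass": 3, "sex": "female", "survived": 0},
    {"pclass": 3, "sex": "male", "survived": 0},
    {"pclass": 3, "sex": "male", "survived": 0},
]

def survival_rate_by(rows, feature):
    """Return the share of survivors for each value of the given feature."""
    totals = defaultdict(int)
    survivors = defaultdict(int)
    for row in rows:
        totals[row[feature]] += 1
        survivors[row[feature]] += row["survived"]
    return {value: survivors[value] / totals[value] for value in totals}

print(survival_rate_by(passengers, "sex"))
print(survival_rate_by(passengers, "pclass"))
```

Each feature here is a column, and `survived` is the labeled outcome. Asking why a rate differs between groups, and whether the feature caused the difference or merely goes along with it, is exactly the correlation vs. causation conversation.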

By doing this exercise, you lay the groundwork for much better insight into how machines can use lots of data, and features in that data, to begin to make predictions. Machine learning is about training computers to recognize patterns from data, and this is a great “Hello World” for data science. (For those schools who want to introduce topics of privilege and diversity in that era, it’s also an avenue to discuss those social issues using data.)

Machine Learning Explained Simply

Terrific Hands-on Lab (Intermediate Learners): Google Machine Learning Recipes

What is Machine Learning? (Google)

This superb and accurate video takes the classic MNIST dataset (which is about getting the computer to correctly “recognize” handwritten digits) and walks through how it’s done. About 3/4 of the way through, it starts getting into matrix/vector math, which is likely beyond most high school curricula, but it’s very thorough in its explanation:

A Pioneer of Modern Machine Learning

Advanced (but Fascinating) Videos and Projects

Got advanced students interested in more? There’s so much out there. I’m currently going through the amazing, free Fast.ai course. It’s a really good overview, and it includes fun recognizers like a cats vs. dogs recognizer, text sentiment analysis and more. There are fun projects on GitHub like DeOldify, which attempts to programmatically colorize black-and-white photos.

What do (Convolutional) Neural Networks “see”?

Neural networks “learn” to pay attention to certain kinds of features. What do these look like? This video does a nice job letting you see into the “black box” of one type of neural network recognizer:

Neural Style Project

It took a little while to set up on my machine, but the Neural Style project is pretty amazing. If you’ve used the app called “Prisma,” you know that it’s possible to take an input photograph and render it in the style of a famous painting. Well, it works with a neural network, and code that does basically the same thing is available on the web in a couple of projects, one of which is neural-style. Fair warning: Getting this up and running is not for the faint of heart. You’ll need a high-powered computer with an NVIDIA graphics card (GPU) and several steps of setup (it took about 30 minutes to get running on my machine). But when you run it, you can play around with input and output that looks like this:

Input

Taking this photograph I took, and getting the neural-style project to render it with a Van Gogh style:

Great AI Podcast

The AI Podcast has a lot of great interviews.

Generative Adversarial Networks (GANs)

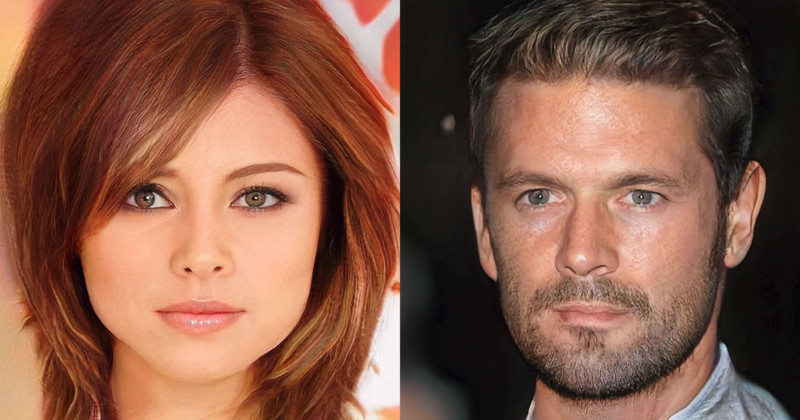

One of the most interesting things going on in machine learning these days is the so-called Generative Adversarial Network (GAN), which uses a “counterfeiter vs. police” adversarial contest to train an algorithm to actually synthesize new things. It’s a very recent idea (the research paper by Goodfellow et al. which set it off was only published in 2014). The idea is that you create a game of sorts between two algorithms: a “Generator” and a “Discriminator”. The Generator can be thought of as a counterfeiter, and the Discriminator can be thought of as the “police”.

Basically, the counterfeiter tries to create realistic-looking fakes, and “wins” when it fools the police. The police, in turn, “win” when they catch the counterfeiter in the act. Played tens of thousands or even millions of times, this game gradually improves both models, and you’re left with a counterfeiter that is pretty good at churning out realistic-looking fakes. Check out the hashtag #BigGAN on Twitter to see some interesting things going on in the field, or at the very least, some very strange images and videos generated by computer.

There’s a great overview of using a GAN to generate pictures of people who don’t exist. (Tons of ethical questions to discuss there, no?) For instance, these two people do not exist, but rather, were synthesized from a GAN which had ingested a lot of celebrity photos:

Another researcher used a GAN to train a neural network to synthesize photos of houses on hillsides, audio equipment and tourist attractions that do not exist in the real world:

homes on a hillside which do not exist in the real world:

audio equipment which does not exist in the real world:

tourist attractions which do not exist in the real world:

And how about this incredible work from the AI team at University of Washington?

Interesting Topic for Mature Audience

One of the pitfalls of machine learning and AI is that bad data used in training can lead to “learned” bad outcomes. An emblematic story which illustrates this, and which might be of interest to some high-school audiences, is the time that Microsoft unleashed Tay, a chatbot which learned from its conversations and was quickly trained into a sex-crazed Nazi. On second thought…

Suggestions Welcome

Do you have suggestions for this list? Please be sure to add them in the comments section below.