In the urgent debate around Seattle’s homelessness crisis, many articles (such as this otherwise great one in Crosscut) cite the statistic that 35% of those who are homeless in the Seattle region have some level of substance abuse. It is often central to the framing, especially for those who wish to portray substance abuse as a relatively minor contributor to the problem. Taken at face value, the statistic implies that 65% of homeless individuals have no substance abuse issue of any kind, and so perhaps that addiction is a less significant factor in causing, following, accompanying, or perpetuating homelessness.

Now, 35% is of course much higher than the national average for the housed and unhoused population combined, so even a rate of just over a third should be alarming. It justifies investment in treatment services and treatment-on-demand, and even thoughtful consideration of increased requirements for those who choose not to enter treatment, because going without help is a danger to those individuals and, in some cases, to others.

But here, I’m concerned with the presentation of that statistic itself and the central reliance on it without any context.

Too often left unmentioned is this key asterisk: The 35% statistic is based entirely on self-reporting.

35%’s Origin

The 35% figure comes from the very worthwhile annual “Point in Time” report, gathered by volunteers, which King County calls the Count Us In Report. Here is the key paragraph summarizing the oft-cited statistic:

Approximately 70% of Count Us In Survey respondents reported living with at least one health condition. The most frequently reported health conditions were psychiatric or emotional conditions (44%), post-traumatic stress disorder (37%), and drug or alcohol abuse (35%). Twenty-seven percent (27%) of respondents reported chronic health problems and 26% reported a physical disability. Over half (53%) of survey respondents indicated that they were living with at least one health condition that was disabling, i.e. preventing them from holding employment, living in stable housing, or taking care of themselves.

King County Count Us In Point-In-Time Report, 2018

Survey’s Great. Interpretation? Often, Not So Much.

Please understand what I’m saying, and do not misrepresent it: The Point In Time Survey does not in any way misrepresent or hide information about what this statistic represents. The report itself characterizes it accurately.

But many of the articles, online discussions, and political polemics that cite this statistic as ground truth do misrepresent it. Note, for instance, the framing in that Crosscut article, at least at this writing. Its lead sentence reads: “Contrary to what some may assume, most people living homeless do not have a substance use disorder (SUD): it’s about 35%, according to a recent local survey.”

No, that’s not what the Count Us In Report says.

It is not accurate to say that 35% of homeless individuals have substance abuse issues; it is accurate that 35% say they do.

I am in no way critical of the considerable effort to gather and report this data. I’m very supportive of it, and I applaud the many volunteers who give their time to do it. It provides extremely useful snapshots in time of things like total counts, vehicular counts, age, gender, regional comparisons, trends and more. That kind of data (pure counts of things that can be clearly observed and independently verified) is pretty reliable.

What people self-report is useful as well, and I’m a fan of collecting it.

The problem I have is when essays and analyses and endless online debates blindly rely upon the “35% of homeless individuals in Seattle have some form of substance addiction” figure without stating — or in some cases, even seemingly knowing — what that figure represents.

35% of homeless individuals surveyed report that they have drug or alcohol abuse as a health issue.

What the 35% means is that, when asked one night by a volunteer stranger whether they have a drug or alcohol issue, 35% of the homeless respondents answer “Yes, I do.”

We can and should ask: what is the likely accuracy of that number? Would that tend to undercount, accurately count, or overcount reality? Statistically speaking, does it tend to generate a lot of false negatives, little error, or false positives?

Intuitively, it seems highly likely that this is an undercount. After all, what incentive does someone who is not addicted have to answer “Yes, I am”? Conversely, among those who are addicted (to opioids, meth, or alcohol), denial is so common that it has become a cliché, at least about alcoholics in particular. To assume that 35% represents reality is to assume that denial, when it comes to admitting substance abuse to strangers, is nonexistent.
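The false-negative/false-positive question can be made concrete with a simple model of how a self-reported rate relates to the true rate. This is a minimal Python sketch, assuming each respondent with a substance problem says “yes” with some probability (called `sensitivity` here) and each respondent without one says “yes” with probability `false_positive_rate`. The function name, both parameters, and the example values are hypothetical illustrations, not figures from the Count Us In data.

```python
def self_reported_rate(true_rate: float, sensitivity: float,
                       false_positive_rate: float = 0.0) -> float:
    """Expected share of respondents who answer "yes".

    true_rate: hypothetical share who actually have a substance problem.
    sensitivity: hypothetical share of those who admit it when asked.
    false_positive_rate: hypothetical share of everyone else who says "yes" anyway.
    """
    admitted = true_rate * sensitivity
    false_yes = (1 - true_rate) * false_positive_rate
    return admitted + false_yes


# Hypothetical: a true rate of 50%, with 70% of affected people admitting it,
# and no false positives, shows up in a survey as 35%.
print(f"{self_reported_rate(0.50, 0.70):.0%}")  # 35%
```

With `false_positive_rate` at zero, the reported rate can never exceed the true rate, which is the formal version of the undercount intuition: any denial at all pulls the survey figure below reality.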

The City’s Own Lawsuit Against Purdue Pharma

Even the City itself has data which is very hard to square with a 35% addiction rate figure.

In the City’s own case against Purdue Pharma, it says: “Seattle’s Navigation Team (…) estimates that 80% of the homeless individuals they encounter in challenging encampments have substance abuse disorders.”

Seattle v Purdue 1:17-md-2804-DAP p8 par18

Now, this figure covers only the “challenging encampments” encountered by the Navigation Team, and it doesn’t count homeless individuals living in private or publicly funded shelter. But note too that the lawsuit is focused on opioid abuse, not the broader alcohol and meth (the fastest growing) addiction issues. It is very hard to square the 80% substance-abuse figure in this subsegment with an overall 35% rate, unless one assumes that the other segments have substance abuse rates dramatically below the US average, close to 0% of any kind, which seems unlikely.
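One way to see the tension is to ask what rate the rest of the population would need for the overall figure to stay at 35%. The sketch below uses the 41% unsheltered share reported on page 32 of the Count Us In Report and, as a simplifying assumption, applies the Navigation Team’s 80% estimate to that entire segment, even though the lawsuit figure covers only “challenging encampments.” The function name is hypothetical.

```python
def implied_remainder_rate(overall_rate: float, segment_share: float,
                           segment_rate: float) -> float:
    """Rate the rest of the population must have for the overall rate to hold.

    Solves overall_rate = segment_share * segment_rate
                          + (1 - segment_share) * remainder_rate
    for remainder_rate.
    """
    if not 0 <= segment_share < 1:
        raise ValueError("segment_share must be in [0, 1)")
    return (overall_rate - segment_share * segment_rate) / (1 - segment_share)


# Assumption: the 80% estimate applies to the whole 41% unsheltered segment.
remainder = implied_remainder_rate(overall_rate=0.35, segment_share=0.41,
                                   segment_rate=0.80)
print(f"{remainder:.1%}")  # 3.7%
```

Under that assumption, everyone outside the unsheltered segment, including people in shelters and vehicles, would need a substance abuse rate under 4% for the overall 35% to hold. A negative result would mean the segment alone already exceeds the overall figure.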

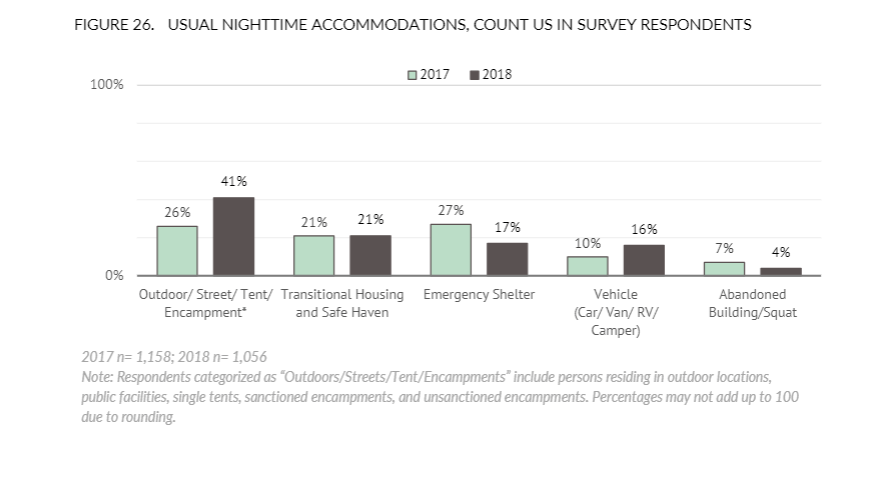

Page 32 of the Count Us In Report, embedded below, shows 41% of the homeless population living in unsheltered tents/encampment/streets and another 16% living in vehicles, with both segments growing rapidly:

Further, the Seattle Navigation Team reports that about 80% of the camps it cleans up contain needles and other physical evidence of substance addiction. That counts only the needles left behind, not those taken or disposed of before the final closure.

The Seattle Is Dying piece was very anecdotal and not at all scientific, but it sure did seem like the people interviewed with direct knowledge (reporters, first responders, and people who have spent years either living in encampments or reporting on them) claim a much higher level than 35%, most saying “100% or close to 100%.”

How can the self-reporting be so low, but these datapoints above be so high?

Do Studies Measure Accuracy of Self-Reporting? Yes!

Surely, this problem has been studied before. What’s the accuracy rate of self-reporting when it comes to substance abuse? Are there studies which ask people and then, say, do lab tests to verify truthfulness?

Initially, I ran across several studies that showed a shocking overall accuracy rate of 89% or more, and I was quite surprised by them. That didn’t match my intuition. When a stranger asks you about potentially illegal activity, or activity that might make you ineligible for services, lead to incarceration, or at a minimum carry some stigma, would you really answer honestly ~90% of the time? Seems odd. Can that really be true?

False Negatives are Very High for Those Not Seeking Treatment

But then I read The Impact of Non-Concordant Self-Report of Substance Use in Clinical Trials Research, which made total sense to me and resolved the basic question. There are two reasons this ~89% accuracy estimate is an overestimate in situations like the Point In Time overnight counts.

Essentially, the super-high 89+% overall accuracy rates are generally for studies either of (a) people who have decided to seek treatment — e.g., they’re already in the lab and know or think a test is about to happen OR (b) studies which blend the overall population, which has an overwhelming number of non-addicts (90.4% of Americans haven’t used hard drugs in the past month, according to NIH.)

That is, the individuals in the former group of studies (those seeking treatment) have a very strong incentive and desire to be accurate, plus the knowledge that they’ll likely be lab-tested anyway. And in the latter group, the overwhelming number of subjects who answer accurately (those with no incentive whatsoever to give false positives) dominates the weighted average for the group as a whole and pushes the measured accuracy artificially high.
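The swamping effect is just a weighted average. In this sketch, the 9.6% “user” share comes from the NIH figure cited above (100% minus 90.4%) and is treated as the group that might deny use. The 50% denial rate, the 70% prevalence for a high-prevalence survey population, and the assumption that non-users always answer accurately are hypothetical values chosen only for illustration; the function name is also hypothetical.

```python
def overall_accuracy(user_share: float, user_sensitivity: float,
                     nonuser_specificity: float = 1.0) -> float:
    """Share of all respondents whose answer matches reality.

    user_sensitivity: share of users who admit use (hypothetical).
    nonuser_specificity: share of non-users who correctly deny use (assumed 1.0).
    """
    return (user_share * user_sensitivity
            + (1 - user_share) * nonuser_specificity)


# Same hypothetical 50% denial rate among users, two different populations.
general = overall_accuracy(user_share=0.096, user_sensitivity=0.5)
high_prevalence = overall_accuracy(user_share=0.70, user_sensitivity=0.5)
print(f"general population: {general:.1%}")                  # general population: 95.2%
print(f"high-prevalence population: {high_prevalence:.1%}")  # high-prevalence population: 65.0%
```

Half of all users deny it in both cases, yet a study of the general population would still report roughly 95% accuracy, because the accurate answers of non-users dominate the average.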

Toward a Better Estimate

I’m no expert, but it feels much more likely that 35%, the self-reported rate, is the floor of any plausible range, and an unlikely value in itself. In other words, it is highly unlikely that 35% represents reality. Accepting that statistic as reality essentially implies believing that every respondent answers a question like “Do you have a substance abuse health problem?” honestly (unless you believe that large numbers of people who aren’t addicted will somehow claim that they are), and no study suggests that they do.

There is almost no pressure pushing the true number below the self-reported one, and significant evidence that the false-negative rate can be 30-50% or more. The true range is also very sensitive to your assumption about reporting error. If you think half of the respondents who have a substance abuse problem deny it (and, intuitively, the error comes almost entirely from false negatives), you end up with a true substance abuse rate in the surveyed population of 70%, not 35%.
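Inverting the reporting model is straightforward if you assume, as this post does, that false positives are negligible: the true rate is the reported rate divided by the share of affected people who admit it. This is a minimal sketch with a hypothetical function name, run over the 30% to 50% false-negative range mentioned above.

```python
def grossed_up_rate(reported_rate: float, false_negative_rate: float) -> float:
    """Estimate the true rate from a self-reported rate, assuming no false positives.

    false_negative_rate: share of affected respondents who deny the problem.
    The result is capped at 100%.
    """
    if not 0 <= false_negative_rate < 1:
        raise ValueError("false_negative_rate must be in [0, 1)")
    return min(1.0, reported_rate / (1 - false_negative_rate))


for fnr in (0.0, 0.3, 0.5):
    print(f"false negatives {fnr:.0%}: true rate ~{grossed_up_rate(0.35, fnr):.0%}")
# false negatives 0%: true rate ~35%
# false negatives 30%: true rate ~50%
# false negatives 50%: true rate ~70%
```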

To me, grossing up the self-reported 35% by a more reasonable factor, given the likelihood of false negatives, yields a true addiction range of 45% (which is quite conservative) to 70% or more. That range is more plausible and much more compatible with the City’s own lawsuit.
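Running the same no-false-positives model in reverse shows why the low end of that range is conservative: it asks what share of affected respondents must be denying the problem for a given true rate to produce the reported 35%. The helper below is hypothetical.

```python
def implied_false_negative_rate(reported_rate: float, true_rate: float) -> float:
    """Share of affected respondents who must deny the problem, assuming no
    false positives, for the given true rate to produce the reported rate."""
    if not 0 < reported_rate <= true_rate <= 1:
        raise ValueError("need 0 < reported_rate <= true_rate <= 1")
    return 1 - reported_rate / true_rate


for true_rate in (0.45, 0.70):
    fnr = implied_false_negative_rate(0.35, true_rate)
    print(f"true rate {true_rate:.0%} implies {fnr:.0%} denial")
# true rate 45% implies 22% denial
# true rate 70% implies 50% denial
```

A 45% true rate requires only about 22% of affected respondents to deny the problem, which is below the 30-50% false-negative range cited above.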

One acquaintance, several of whose loved ones have suffered directly from addiction, has been told by service providers and doctors that self-reporting is usually off by a factor of 2 to 3. (Relying solely on this anecdotal feedback, even 70% would be low.)
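For comparison, the anecdotal “off by a factor of 2 to 3” rule of thumb can be applied directly. This sketch, with a hypothetical helper name, caps the result at 100% since a rate cannot exceed that.

```python
def apply_multiplier(reported_rate: float, factor: float) -> float:
    """Scale a self-reported rate by an undercount factor, capped at 100%."""
    if factor < 1:
        raise ValueError("an undercount factor should be at least 1")
    return min(1.0, reported_rate * factor)


for factor in (2, 3):
    print(f"{factor}x: {apply_multiplier(0.35, factor):.0%}")
# 2x: 70%
# 3x: 100%
```

At 3x the uncapped figure would be 105%, so the upper end saturates; on this feedback alone, 70% sits at the bottom of the range.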

At a minimum, when people cite the 35% statistic, I think we should encourage an asterisk that this is self-reported data — what people say about themselves.

Update, October 8, 2019:

The Los Angeles Times conducted its own analysis for Los Angeles, comparing its counts with self-reporting, and its findings agree almost entirely with the estimates above: https://www.latimes.com/california/story/2019-10-07/homeless-population-mental-illness-disability?fbclid=IwAR0JZSWG4N2791Gour_KYjh9ZSuBpTXmAjHgSRJI71lPegpmSUWezzabcqE