I’ve been dusting off my machine learning/data-science skills by diving into Python, which has become a lingua franca (along with R) of the data analysis world. Python’s libraries for data analysis and visualization are really superb and can make quick work of complex data analysis tasks.

Sentiment Analysis

Today, it’s possible to use computers to quantify, with reasonable accuracy, the emotional “sentiment” of an utterance, determining if it is fundamentally positive, negative or neutral in nature. This is called “sentiment analysis,” and is a very fertile area for some really interesting projects.

Looking around for basic topics to put new Python learnings to the test, I thought it’d be fun to take a look at the Twitter sentiment and “virality” of two famous tweeters: realDonaldTrump and hillaryclinton.

Using basic data science techniques, it seemed possible to answer questions such as:

- Which of the two is currently more negative on Twitter?

- Are Trump’s Twitter followers more “viral” than Clinton’s?

- Under what conditions do their followers tend to like and retweet their messages?

- When one of them goes negative, how do their followers respond?

- etc.

[Update: I’ve just discovered Jupyter, which would be the ideal platform for writing this up.]

Caveats

This is only the result of about 90 minutes of work, meant as a fun “throwaway” project to help me learn the frameworks. This is not university-level, peer-reviewed research. There are numerous caveats, and I caution against over-interpreting the data.

The two biggest caveats: First, the problem of Twitter bots is real and well known. Twitter is fighting them, but it’s impossible to say from the data below just how much they skew the numbers. Still, the results suggest to me that Trump probably has more bots that simply retweet and like whatever he says, regardless of content.

Second, note that I’m using only the most basic “sentiment analysis” library: TextBlob. It is prone to misclassification, as it looks word by word and does not accurately measure sentiment in the case of, for instance, double negatives. This is experimental, and meant solely for learning and fun. Several tests I’ve run across have shown it to be only about 83–85% accurate.
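To make the word-by-word limitation concrete, here is a minimal sketch of a naive lexicon scorer. It is a toy, not TextBlob's actual algorithm: the word lists and the names `naive_polarity` and `label` are all invented for this illustration.

```python
# Toy word lists, invented for this illustration (not TextBlob's lexicon).
POSITIVE = {"good", "great", "love", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "sad"}


def naive_polarity(text: str) -> int:
    """Score text word by word: +1 per positive word, -1 per negative word."""
    score = 0
    for word in text.lower().split():
        word = word.strip(".,!?")  # drop trailing punctuation
        if word in POSITIVE:
            score += 1
        elif word in NEGATIVE:
            score -= 1
    return score


def label(score: int) -> str:
    """Map a numeric score to a positive/neutral/negative label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for sentence in ["This is not bad at all", "I love this!", "The meeting is at noon."]:
    print(sentence, "->", label(naive_polarity(sentence)))
```

The first sentence comes out negative because the scorer sees only "bad" and ignores the "not" in front of it, which is the kind of error to keep in mind when reading the numbers below.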

Results

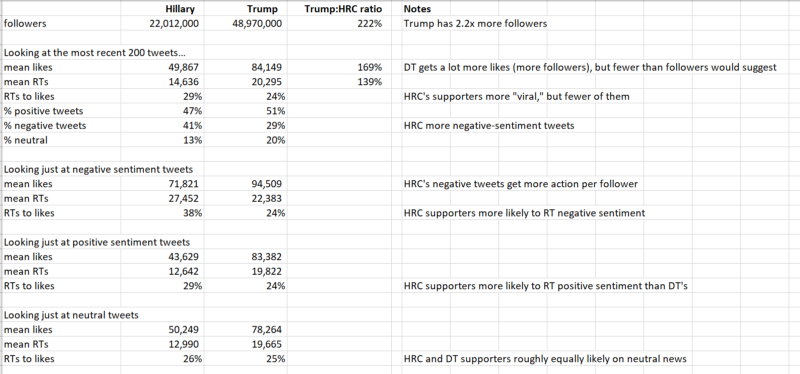

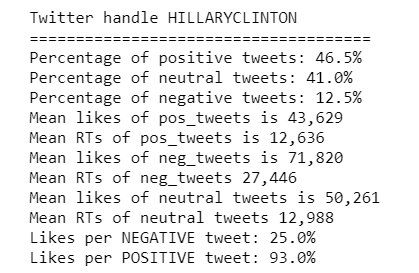

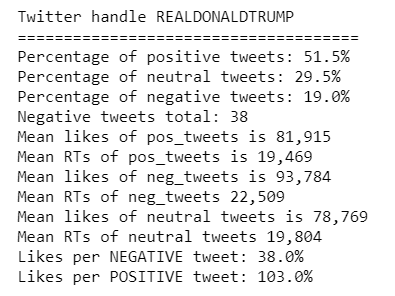

Nevertheless, here’s the result of the analysis as of March 13, 2018, looking at their last 200 tweets:

What jumped out at me

- Trump has 2.2x as many followers as HRC. OK, duh. We don’t need Python for that. But this is important to keep in mind for the table above, so it’s first off the bat. Said another way, if the two audiences behaved alike, you’d expect a similar 2.2x ratio in retweets, likes, and responses to negative or positive expressions.

- HRC’s followers are significantly more “viral” on a per-capita basis: they like and retweet much more per follower than Trump’s do. While Trump has 2.2x as many followers, he has only 69% more “likes” from his followers. Across her last 200 tweets, HRC’s followers are about twice as active, in general, in liking/retweeting what she writes.

- Trump’s followers are equally likely to RT Trump regardless of whether what he writes is positive, negative or neutral. Another way to think of this is that no matter what Trump says, a sizable percentage of his core followers will retweet it. (One plausible theory — see “bots” above. He may well have more of them.)

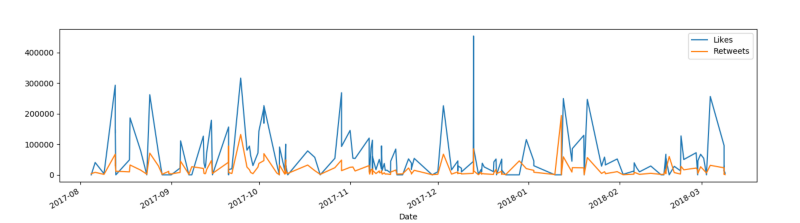

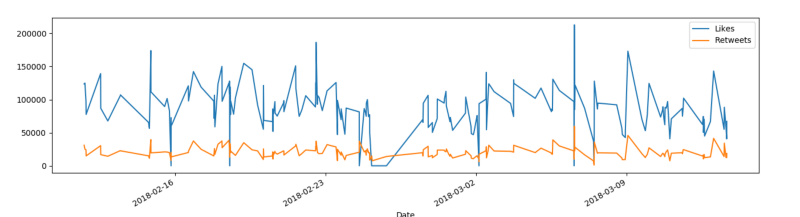

- Note the substantial dropoff of retweets for Trump (and not for Clinton) around the end of February and early March, 2018. One hypothesis (I’ve not yet checked) is that this was due to a purge of bots by Twitter.
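As a quick check on the per-capita point above, the two figures quoted there (2.2x the followers, 69% more likes) can be turned into a likes-per-follower ratio. This sketch uses only those two numbers; retweet figures are not quoted in the text, so it covers likes only, and `per_follower_ratio` is a helper name invented here.

```python
def per_follower_ratio(follower_ratio: float, engagement_ratio: float) -> float:
    """Engagement per follower of account A relative to account B.

    Both arguments are A-to-B ratios (A's total divided by B's total).
    """
    return engagement_ratio / follower_ratio


# Figures quoted in the text: Trump has 2.2x the followers and 69% more likes.
trump_vs_clinton = per_follower_ratio(follower_ratio=2.2, engagement_ratio=1.69)
clinton_vs_trump = 1 / trump_vs_clinton

print(f"Trump likes per follower, relative to Clinton: {trump_vs_clinton:.2f}")
print(f"Clinton likes per follower, relative to Trump: {clinton_vs_trump:.2f}")
```

On likes alone, that works out to each Clinton follower liking roughly 1.3 times as often as a Trump follower.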

Most Retweeted Tweet, Trump (in past 200):

Lowest rated Oscars in HISTORY. Problem is, we don’t have Stars anymore — except your President (just kidding, of course)!

Most Retweeted Tweet, Clinton (in past 200):

RT @BillKristol: Two weeks ago a 26-year old soldier raced repeatedly into a burning Bronx apartment building, saving four people before he died in the flames. His name was Pvt. Emmanuel Mensah and he immigrated from Ghana, a country Donald Trump apparently thinks produces very subpar immigrants.

https://twitter.com/BillKristol/status/951637572576477184?utm_campaign=crowdfire&utm_content=crowdfire&utm_medium=social&utm_source=twitter

Code

# General:
import tweepy  # To consume Twitter's API
import pandas as pd  # To handle data
import numpy as np  # For number computing

# For plotting and visualization:
from IPython.display import display
import matplotlib.pyplot as plt
import seaborn as sns
#%matplotlib inline

# For sentiment analysis and text cleaning:
from textblob import TextBlob
import re

# We import our access keys:
from credentials import *  # This will allow us to use the keys as variables

# API's setup:
def twitter_setup():
    """
    Utility function to set up the Twitter API
    with our access keys provided.
    """
    # Authentication and access using keys:
    auth = tweepy.OAuthHandler(CONSUMER_KEY, CONSUMER_SECRET)
    auth.set_access_token(ACCESS_TOKEN, ACCESS_SECRET)

    # Return API with authentication:
    api = tweepy.API(auth)
    return api

# We create an extractor object:
extractor = twitter_setup()

# We create a tweet list as follows:
tweets = extractor.user_timeline(screen_name="hillaryclinton", count=200)
print("Number of tweets extracted: {}.\n".format(len(tweets)))

def clean_tweet(tweet):
    '''
    Utility function to clean the text in a tweet by removing
    @mentions, links and special characters using regex.
    '''
    return ' '.join(re.sub(r"(@[A-Za-z0-9]+)|([^0-9A-Za-z \t])|(\w+://\S+)", " ", tweet).split())

def analyze_sentiment(tweet):
    '''
    Utility function to classify the polarity of a tweet
    using textblob.
    '''
    analysis = TextBlob(clean_tweet(tweet))
    if analysis.sentiment.polarity > 0:
        return 1
    elif analysis.sentiment.polarity == 0:
        return 0
    else:
        return -1

# We print the most recent 5 tweets:
print("5 recent tweets:\n")
for tweet in tweets[:5]:
    print(tweet.text)
    print()

# We create a pandas dataframe as follows:
data = pd.DataFrame(data=[tweet.text for tweet in tweets], columns=['Tweets'])

# We display the first 10 elements of the dataframe:
display(data.head(10))

# We add one column per tweet attribute:
data['len'] = np.array([len(tweet.text) for tweet in tweets])
data['ID'] = np.array([tweet.id for tweet in tweets])
data['Date'] = np.array([tweet.created_at for tweet in tweets])
data['Source'] = np.array([tweet.source for tweet in tweets])
data['Likes'] = np.array([tweet.favorite_count for tweet in tweets])
data['RTs'] = np.array([tweet.retweet_count for tweet in tweets])

# We create a column with the result of the analysis:
data['SA'] = np.array([analyze_sentiment(tweet) for tweet in data['Tweets']])

# We display the updated dataframe with the new column:
display(data.head(10))

# We extract the mean of lengths:
mean = np.mean(data['len'])
print("The average length of tweets: {}".format(mean))

# We extract the tweets with the most FAVs and the most RTs:
fav_max = np.max(data['Likes'])
rt_max = np.max(data['RTs'])
fav = data[data.Likes == fav_max].index[0]
rt = data[data.RTs == rt_max].index[0]

# Max FAVs:
print("The tweet with more likes is: \n{}".format(data['Tweets'][fav]))
print("Number of likes: {}".format(fav_max))
print("{} characters.\n".format(data['len'][fav]))

# Max RTs:
print("The tweet with more retweets is: \n{}".format(data['Tweets'][rt]))
print("Number of retweets: {}".format(rt_max))
print("{} characters.\n".format(data['len'][rt]))

# Mean likes and RTs:
meanlikes = np.mean(data["Likes"])
meanRTs = np.mean(data["RTs"])
print("Mean likes is {}".format(meanlikes))
print("Mean RTs is {}".format(meanRTs))

# Time Series
tlen = pd.Series(data=data['len'].values, index=data['Date'])
tfav = pd.Series(data=data['Likes'].values, index=data['Date'])
tret = pd.Series(data=data['RTs'].values, index=data['Date'])

# Lengths along time:
# tlen.plot(figsize=(16,4), color='r')

# Likes vs retweets visualization:
tfav.plot(figsize=(16,4), label="Likes", legend=True)
tret.plot(figsize=(16,4), label="Retweets", legend=True)
plt.show()

rts2likes = pd

# We split the tweets by sentiment label:
pos_tweets = [tweet for index, tweet in enumerate(data['Tweets']) if data['SA'][index] > 0]
neu_tweets = [tweet for index, tweet in enumerate(data['Tweets']) if data['SA'][index] == 0]
neg_tweets = [tweet for index, tweet in enumerate(data['Tweets']) if data['SA'][index] < 0]

print("Percentage of positive tweets: {}%".format(len(pos_tweets)*100/len(data['Tweets'])))
print("Percentage of neutral tweets: {}%".format(len(neu_tweets)*100/len(data['Tweets'])))
print("Percentage of negative tweets: {}%".format(len(neg_tweets)*100/len(data['Tweets'])))

# Mean Likes of Positives
pos_tweet_likes = [likes for index, likes in enumerate(data['Likes']) if data['SA'][index] > 0]
pos_tweet_rts = [rts for index, rts in enumerate(data['RTs']) if data['SA'][index] > 0]
pos_likes_avg = np.mean(pos_tweet_likes)
pos_RTs_avg = np.mean(pos_tweet_rts)
print("Mean likes of pos_tweets is {}".format(pos_likes_avg))
print("Mean RTs of pos_tweets is {}".format(pos_RTs_avg))

# Mean Likes of Negatives
neg_tweet_likes = [likes for index, likes in enumerate(data['Likes']) if data['SA'][index] < 0]
neg_tweet_rts = [rts for index, rts in enumerate(data['RTs']) if data['SA'][index] < 0]
neg_likes_avg = np.mean(neg_tweet_likes)
neg_RTs_avg = np.mean(neg_tweet_rts)
print("Mean likes of neg_tweets is {}".format(neg_likes_avg))
print("Mean RTs of neg_tweets is {}".format(neg_RTs_avg))

# Mean Likes of Neutrals
neutral_tweet_likes = [likes for index, likes in enumerate(data['Likes']) if data['SA'][index] == 0]
neutral_tweet_rts = [rts for index, rts in enumerate(data['RTs']) if data['SA'][index] == 0]
neutral_likes_avg = np.mean(neutral_tweet_likes)
neutral_RTs_avg = np.mean(neutral_tweet_rts)
print("Mean likes of neutral tweets is {}".format(neutral_likes_avg))
print("Mean RTs of neutral tweets is {}".format(neutral_RTs_avg))
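The per-sentiment blocks above repeat the same filter-and-average pattern three times. As a possible alternative, pandas can compute all of those means in one pass with `groupby`. The sketch below assumes a DataFrame with the same `SA`, `Likes`, and `RTs` columns as above; the rows are placeholder values invented for illustration, not real tweet data.

```python
import pandas as pd

# Placeholder rows, invented for illustration (not real tweet data).
data = pd.DataFrame({
    "SA":    [1, 1, 0, -1, -1, 0],
    "Likes": [100, 300, 50, 400, 200, 150],
    "RTs":   [10, 30, 5, 40, 20, 15],
})

# Mean likes and retweets for each sentiment label (-1, 0, 1).
by_sentiment = data.groupby("SA")[["Likes", "RTs"]].mean()

# Share of tweets carrying each label, as a percentage.
by_sentiment["share_pct"] = data["SA"].value_counts(normalize=True) * 100

print(by_sentiment)
```

Comparing the `RTs` column across the three rows is the same check as the "equally likely to RT regardless of sentiment" observation, in a single table.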

Acknowledgements

Thanks much to this informative article by Rodolfo Ferro for the jumpstart.